Diabetic Retinopathy Detection

Diabetic retinopathy detection is the process of identifying and diagnosing the growth of abnormal blood vessels and damage in the retina due to high blood sugar from diabetes, using deep learning techniques.

Papers and Code

Experience with Single Domain Generalization in Real World Medical Imaging Deployments

Jan 22, 2026

A desirable property of any deployed artificial intelligence is generalization across domains, where a domain is the data-generating distribution under a specific acquisition condition. In medical imaging applications, the most coveted property for effective deployment is Single Domain Generalization (SDG), which addresses the challenge of training a model on a single domain so that it generalizes well to unseen target domains. In multi-center studies, differences in scanners and imaging protocols introduce domain shifts that exacerbate variability in rare-class characteristics. This paper presents our experience with SDG in real-life deployment for two exemplary medical imaging case studies: seizure onset zone detection using fMRI data, and stress electrocardiogram based coronary artery detection. Using the widely studied application of diabetic retinopathy (DR), we first demonstrate that state-of-the-art (SOTA) SDG techniques fail to achieve generalized performance across data domains. We then develop a generic expert-knowledge-integrated deep learning technique, DL+EKE, instantiate it for the DR application, and show that DL+EKE outperforms SOTA SDG methods on DR. Finally, we deploy instances of the DL+EKE technique on the two real-world examples of stress ECG and resting-state (rs)-fMRI and discuss issues faced with SDG techniques.

SGW-GAN: Sliced Gromov-Wasserstein Guided GANs for Retinal Fundus Image Enhancement

Jan 19, 2026

Retinal fundus photography is indispensable for ophthalmic screening and diagnosis, yet image quality is often degraded by noise, artifacts, and uneven illumination. Recent GAN- and diffusion-based enhancement methods improve perceptual quality by aligning degraded images with high-quality distributions, but our analysis shows that this focus can distort intra-class geometry: clinically related samples become dispersed, disease-class boundaries blur, and downstream tasks such as grading or lesion detection are harmed. The Gromov-Wasserstein (GW) discrepancy offers a principled solution by aligning distributions through internal pairwise distances, naturally preserving intra-class structure, but its high computational cost restricts practical use. To overcome this, we propose SGW-GAN, the first framework to incorporate Sliced GW (SGW) into retinal image enhancement. SGW approximates GW via random projections, retaining relational fidelity while greatly reducing cost. Experiments on public datasets show that SGW-GAN produces visually compelling enhancements, achieves superior diabetic retinopathy grading, and reports the lowest GW discrepancy across disease labels, demonstrating both efficiency and clinical fidelity for unpaired medical image enhancement.
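The idea of approximating GW via random projections can be illustrated with a minimal sketch. It assumes two equally sized point sets with uniform weights in the same feature dimension, and it evaluates each 1D problem only on the sorted and reverse-sorted matchings, which is the usual sliced-GW shortcut and may not be exactly optimal in every case. The function names (`sliced_gw`, `_gw_1d_cost`) are hypothetical, and this is not the SGW-GAN training loss, only the discrepancy it builds on.

```python
import math
import random


def _unit_direction(dim, rng):
    """Draw a random direction on the unit sphere in `dim` dimensions."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def _gw_1d_cost(xs, ys):
    """GW cost of matching xs[i] to ys[i], comparing squared pairwise gaps."""
    n = len(xs)
    total = 0.0
    for i in range(n):
        for j in range(n):
            gap_x = (xs[i] - xs[j]) ** 2
            gap_y = (ys[i] - ys[j]) ** 2
            total += (gap_x - gap_y) ** 2
    return total / (n * n)


def sliced_gw(points_x, points_y, num_projections=50, seed=0):
    """Average 1D GW cost over random projections (naive O(n^2) per slice)."""
    if len(points_x) != len(points_y):
        raise ValueError("this sketch assumes equally sized point sets")
    dim = len(points_x[0])
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_projections):
        theta = _unit_direction(dim, rng)
        proj_x = sorted(sum(a * b for a, b in zip(p, theta)) for p in points_x)
        proj_y = sorted(sum(a * b for a, b in zip(q, theta)) for q in points_y)
        # Candidate matchings: same order, or reversed order.
        total += min(_gw_1d_cost(proj_x, proj_y),
                     _gw_1d_cost(proj_x, proj_y[::-1]))
    return total / num_projections


# Toy data: GW compares internal distances, so a pure shift costs ~0.
_rng = random.Random(1)
cloud = [[_rng.random(), _rng.random(), _rng.random()] for _ in range(20)]
shifted = [[c + 5.0 for c in p] for p in cloud]
stretched = [[3.0 * c for c in p] for p in cloud]
shift_cost = sliced_gw(cloud, shifted)
stretch_cost = sliced_gw(cloud, stretched)
```

Because the cost depends only on pairwise distances within each set, the shifted copy scores near zero while the stretched copy does not, which is the relational property the abstract refers to.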

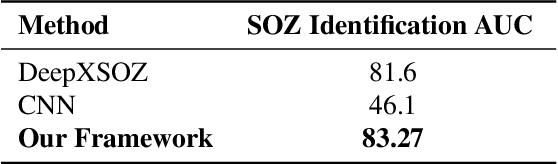

XAI-MeD: Explainable Knowledge Guided Neuro-Symbolic Framework for Domain Generalization and Rare Class Detection in Medical Imaging

Jan 05, 2026

Explainability, domain generalization, and rare-class reliability are critical challenges in medical AI, where deep models often fail under real-world distribution shifts and exhibit bias against infrequent clinical conditions. This paper introduces XAI-MeD, an explainable medical AI framework that integrates clinically accurate expert knowledge into deep learning through a unified neuro-symbolic architecture. XAI-MeD is designed to improve robustness under distribution shift, enhance rare-class sensitivity, and deliver transparent, clinically aligned interpretations. The framework encodes clinical expertise as logical connectives over atomic medical propositions, transforming them into machine-checkable, class-specific rules. Their diagnostic utility is quantified through weighted feature satisfaction scores, enabling a symbolic reasoning branch that complements neural predictions. A confidence-weighted fusion integrates symbolic and deep outputs, while a Hunt-inspired adaptive routing mechanism guided by Entropy Imbalance Gain (EIG) and Rare-Class Gini mitigates class imbalance, high intra-class variability, and uncertainty. We evaluate XAI-MeD across diverse modalities on four challenging tasks, including (i) Seizure Onset Zone (SOZ) localization from rs-fMRI and (ii) Diabetic Retinopathy grading across 6 multicenter datasets. The results demonstrate substantial performance improvements, including 6% gains in cross-domain generalization and a 10% improvement in rare-class F1 score, far outperforming state-of-the-art deep learning baselines. Ablation studies confirm that the clinically grounded symbolic components act as effective regularizers, ensuring robustness to distribution shifts. XAI-MeD thus provides a principled, clinically faithful, and interpretable approach to multimodal medical AI.

Balancing Accuracy and Efficiency: CNN Fusion Models for Diabetic Retinopathy Screening

Dec 26, 2025

Diabetic retinopathy (DR) remains a leading cause of preventable blindness, yet large-scale screening is constrained by limited specialist availability and variable image quality across devices and populations. This work investigates whether feature-level fusion of complementary convolutional neural network (CNN) backbones can deliver accurate and efficient binary DR screening on globally sourced fundus images. Using 11,156 images pooled from five public datasets (APTOS, EyePACS, IDRiD, Messidor, and ODIR), we frame DR detection as a binary classification task and compare three pretrained models (ResNet50, EfficientNet-B0, and DenseNet121) against pairwise and tri-fusion variants. Across five independent runs, fusion consistently outperforms single backbones. The EfficientNet-B0 + DenseNet121 (Eff+Den) fusion model achieves the best overall mean performance (accuracy: 82.89%) with balanced class-wise F1-scores for normal (83.60%) and diabetic (82.60%) cases. While the tri-fusion is competitive, it incurs a substantially higher computational cost. Inference profiling highlights a practical trade-off: EfficientNet-B0 is the fastest (approximately 1.16 ms/image at batch size 1000), whereas the Eff+Den fusion offers a favorable accuracy-latency balance. These findings indicate that lightweight feature fusion can enhance generalization across heterogeneous datasets, supporting scalable binary DR screening workflows where both accuracy and throughput are critical.
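As a rough illustration of feature-level fusion, the sketch below concatenates the pooled feature vectors of two backbones and feeds them to a single logistic head. The abstract does not state the fusion operator, so concatenation is an assumption, and the feature vectors and weights here are random stand-ins rather than real backbone outputs or trained parameters. The helper names (`fuse_features`, `dr_probability`) are hypothetical.

```python
import math
import random

EFFNET_B0_DIM = 1280    # pooled feature width of EfficientNet-B0
DENSENET121_DIM = 1024  # pooled feature width of DenseNet121


def fuse_features(feat_a, feat_b):
    """Feature-level fusion by concatenation (an assumed fusion operator)."""
    return list(feat_a) + list(feat_b)


def dr_probability(fused, weights, bias):
    """Binary screening head: logistic regression over the fused vector."""
    logit = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


rng = random.Random(0)
# Stand-ins for real backbone outputs; a deployment would run the CNNs here.
eff_feat = [rng.random() for _ in range(EFFNET_B0_DIM)]
den_feat = [rng.random() for _ in range(DENSENET121_DIM)]

fused = fuse_features(eff_feat, den_feat)
weights = [rng.uniform(-0.01, 0.01) for _ in fused]  # untrained, illustrative
prob = dr_probability(fused, weights, bias=0.0)
```

The fused vector has 2,304 entries, so the head grows with every added backbone, which is one reason a tri-fusion model costs more at inference time than a pairwise one.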

Quadrant Segmentation VLM with Few-Shot Adaptation and OCT Learning-based Explainability Methods for Diabetic Retinopathy

Dec 20, 2025

Diabetic Retinopathy (DR) is a leading cause of vision loss worldwide, requiring early detection to preserve sight. Limited access to physicians often leaves DR undiagnosed. To address this, AI models utilize lesion segmentation for interpretability; however, manually annotating lesions is impractical for clinicians. Physicians require a model that explains the reasoning for classifications rather than just highlighting lesion locations. Furthermore, current models are one-dimensional, relying on a single imaging modality for explainability and achieving limited effectiveness. In contrast, a quantitative-detection system that identifies individual DR lesions in natural language would overcome these limitations, enabling diverse applications in screening, treatment, and research settings. To address this issue, this paper presents a novel multimodal explainability model utilizing a VLM with few-shot learning, which mimics an ophthalmologist's reasoning by analyzing lesion distributions within retinal quadrants for fundus images. The model generates paired Grad-CAM heatmaps, showcasing individual neuron weights across both OCT and fundus images, which visually highlight the regions contributing to DR severity classification. Using a dataset of 3,000 fundus images and 1,000 OCT images, this innovative methodology addresses key limitations in current DR diagnostics, offering a practical and comprehensive tool for improving patient outcomes.

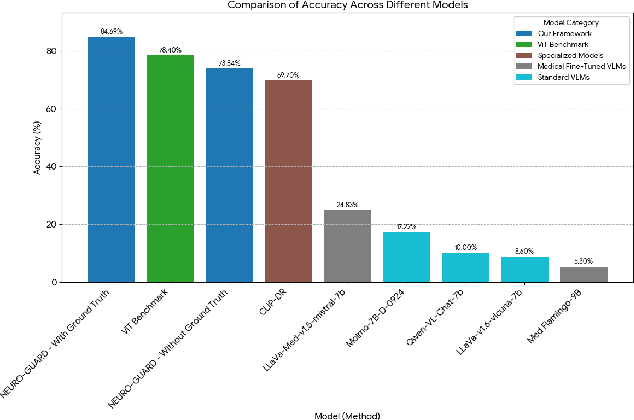

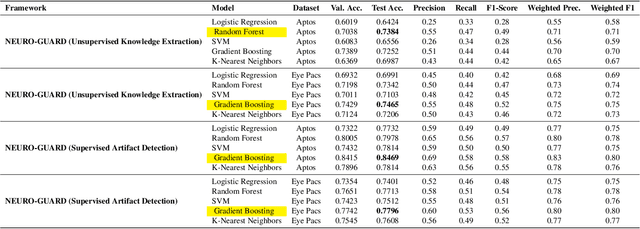

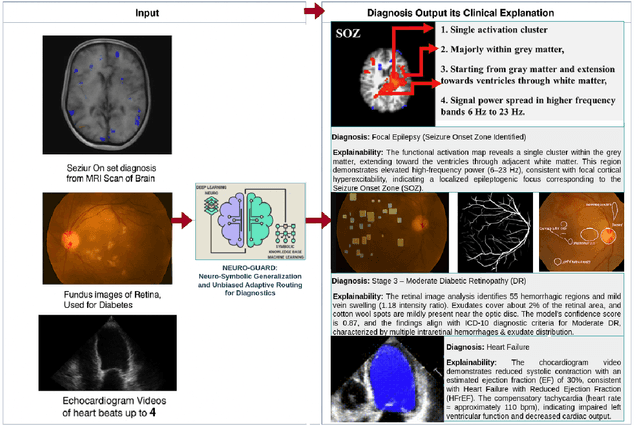

NEURO-GUARD: Neuro-Symbolic Generalization and Unbiased Adaptive Routing for Diagnostics -- Explainable Medical AI

Dec 20, 2025

Accurate yet interpretable image-based diagnosis remains a central challenge in medical AI, particularly in settings characterized by limited data, subtle visual cues, and high-stakes clinical decision-making. Most existing vision models rely on purely data-driven learning and produce black-box predictions with limited interpretability and poor cross-domain generalization, hindering their real-world clinical adoption. We present NEURO-GUARD, a novel knowledge-guided vision framework that integrates Vision Transformers (ViTs) with language-driven reasoning to improve performance, transparency, and domain robustness. NEURO-GUARD employs a retrieval-augmented generation (RAG) mechanism for self-verification, in which a large language model (LLM) iteratively generates, evaluates, and refines feature-extraction code for medical images. By grounding this process in clinical guidelines and expert knowledge, the framework progressively enhances feature detection and classification beyond purely data-driven baselines. Extensive experiments on diabetic retinopathy classification across four benchmark datasets (APTOS, EyePACS, Messidor-1, and Messidor-2) demonstrate that NEURO-GUARD improves accuracy by 6.2% over a ViT-only baseline (84.69% vs. 78.4%) and achieves a 5% gain in domain generalization. Additional evaluations on MRI-based seizure detection further confirm its cross-domain robustness, consistently outperforming existing methods. Overall, NEURO-GUARD bridges symbolic medical reasoning with subsymbolic visual learning, enabling interpretable, knowledge-aware, and generalizable medical image diagnosis while achieving state-of-the-art performance across multiple datasets.

WDT-MD: Wavelet Diffusion Transformers for Microaneurysm Detection in Fundus Images

Nov 16, 2025

Microaneurysms (MAs), the earliest pathognomonic signs of Diabetic Retinopathy (DR), present as sub-60 μm lesions in fundus images with highly variable photometric and morphological characteristics, rendering manual screening not only labor-intensive but inherently error-prone. While diffusion-based anomaly detection has emerged as a promising approach for automated MA screening, its clinical application is hindered by three fundamental limitations. First, these models often fall prey to "identity mapping", where they inadvertently replicate the input image. Second, they struggle to distinguish MAs from other anomalies, leading to high false positives. Third, their suboptimal reconstruction of normal features hampers overall performance. To address these challenges, we propose a Wavelet Diffusion Transformer framework for MA Detection (WDT-MD), which features three key innovations: a noise-encoded image conditioning mechanism to avoid "identity mapping" by perturbing image conditions during training; pseudo-normal pattern synthesis via inpainting to introduce pixel-level supervision, enabling discrimination between MAs and other anomalies; and a wavelet diffusion Transformer architecture that combines the global modeling capability of diffusion Transformers with multi-scale wavelet analysis to enhance reconstruction of normal retinal features. Comprehensive experiments on the IDRiD and e-ophtha MA datasets demonstrate that WDT-MD outperforms state-of-the-art methods in both pixel-level and image-level MA detection. This advancement holds significant promise for improving early DR screening.

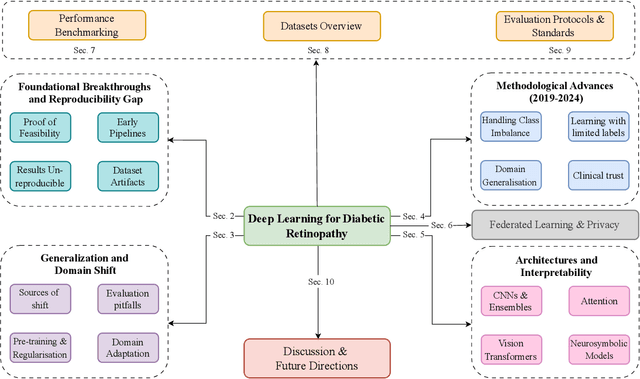

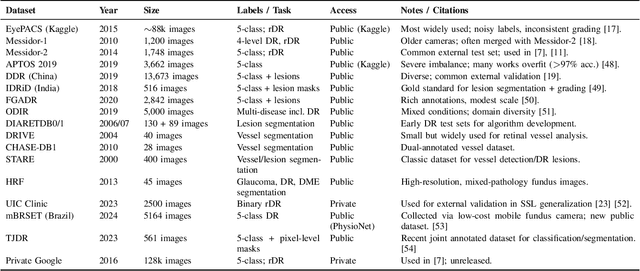

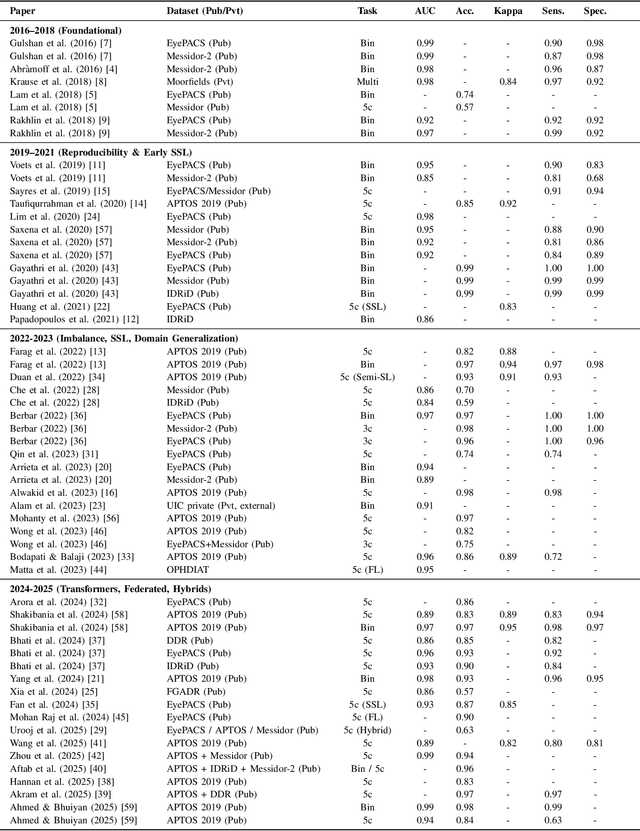

From Retinal Pixels to Patients: Evolution of Deep Learning Research in Diabetic Retinopathy Screening

Nov 14, 2025

Diabetic Retinopathy (DR) remains a leading cause of preventable blindness, with early detection critical for reducing vision loss worldwide. Over the past decade, deep learning has transformed DR screening, progressing from early convolutional neural networks trained on private datasets to advanced pipelines addressing class imbalance, label scarcity, domain shift, and interpretability. This survey provides the first systematic synthesis of DR research spanning 2016-2025, consolidating results from 50+ studies and over 20 datasets. We critically examine methodological advances, including self- and semi-supervised learning, domain generalization, federated training, and hybrid neuro-symbolic models, alongside evaluation protocols, reporting standards, and reproducibility challenges. Benchmark tables contextualize performance across datasets, while discussion highlights open gaps in multi-center validation and clinical trust. By linking technical progress with translational barriers, this work outlines a practical agenda for reproducible, privacy-preserving, and clinically deployable DR AI. Beyond DR, many of the surveyed innovations extend broadly to medical imaging at scale.

Stage Aware Diagnosis of Diabetic Retinopathy via Ordinal Regression

Nov 18, 2025

Diabetic Retinopathy (DR) has emerged as a major cause of preventable blindness in recent times. With timely screening and intervention, the condition can be prevented from causing irreversible damage. The work introduces a state-of-the-art Ordinal Regression-based DR Detection framework that uses the APTOS-2019 fundus image dataset. A widely accepted combination of preprocessing methods (Green Channel (GC) Extraction, Noise Masking, and CLAHE) was used to isolate the most relevant features for DR classification. Model performance was evaluated using the Quadratic Weighted Kappa (QWK), with a focus on agreement between results and clinical grading. Our Ordinal Regression approach attained a QWK score of 0.8992, setting a new benchmark on the APTOS dataset.
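For readers unfamiliar with the metric, the following is a minimal sketch of the Quadratic Weighted Kappa for ordinal grades, written from the standard definition rather than taken from the paper. The function name and the toy labels are illustrative only; APTOS-2019 uses five grades (0 to 4), so `num_classes=5` would apply there.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Cohen's kappa with quadratic weights for labels 0..num_classes-1."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)

    # Observed confusion matrix: rows are true grades, columns predictions.
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1

    true_hist = [sum(row) for row in observed]
    pred_hist = [sum(observed[i][j] for i in range(num_classes))
                 for j in range(num_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Penalty grows with the squared distance between grades.
            weight = (i - j) ** 2 / (num_classes - 1) ** 2
            expected = true_hist[i] * pred_hist[j] / n  # chance agreement
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0.0:
        # Both label lists use one identical grade: agreement is perfect.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


perfect = quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 4], 5)
partial = quadratic_weighted_kappa([0, 1, 2], [0, 1, 1], 3)
```

Because the weight is quadratic in the grade gap, confusing grade 0 with grade 4 is penalized sixteen times more than confusing adjacent grades, which is why the metric suits ordinal DR grading.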

A Hybrid Framework Bridging CNN and ViT based on Theory of Evidence for Diabetic Retinopathy Grading

Oct 30, 2025

Diabetic retinopathy (DR) is a leading cause of vision loss among middle-aged and elderly people, significantly affecting their daily lives and mental health. To improve the efficiency of clinical screening and enable the early detection of DR, a variety of automated DR diagnosis systems have recently been built on convolutional neural networks (CNNs) or vision Transformers (ViTs). However, because of the inherent shortcomings of each architecture, the performance of existing methods that use a single type of backbone has reached a bottleneck. One potential route to further improvement is to integrate different kinds of backbones so as to leverage their respective strengths (i.e., the local feature extraction capability of CNNs and the global feature capturing ability of ViTs). To this end, we propose a novel paradigm that fuses the features extracted by different backbones based on the theory of evidence. Specifically, the proposed evidential fusion paradigm transforms the features from different backbones into supporting evidence via a set of deep evidential networks. From this supporting evidence, an aggregated opinion is formed, which is used to adaptively tune the fusion pattern between backbones and thereby boost the performance of the hybrid model. We evaluated our method on two publicly available DR grading datasets. The experimental results demonstrate that our hybrid model not only improves the accuracy of DR grading compared with state-of-the-art frameworks, but also provides excellent interpretability for feature fusion and decision-making.
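To make the notion of turning evidence into an aggregated opinion concrete, here is a minimal sketch using a formulation common in evidential deep learning: per-class evidence defines a Dirichlet-based opinion (belief masses plus an uncertainty mass), and two opinions are merged with a reduced Dempster combination rule. This is an assumed formulation for illustration; the abstract does not specify the paper's exact rule, and the function names and evidence values are hypothetical.

```python
def opinion_from_evidence(evidence):
    """Map non-negative per-class evidence to (belief masses, uncertainty)."""
    num_classes = len(evidence)
    # Dirichlet strength S = sum(e_k + 1); more evidence means less doubt.
    strength = sum(evidence) + num_classes
    beliefs = [e / strength for e in evidence]
    uncertainty = num_classes / strength
    return beliefs, uncertainty


def combine_opinions(opinion_a, opinion_b):
    """Merge two opinions with a reduced Dempster combination rule."""
    (b_a, u_a), (b_b, u_b) = opinion_a, opinion_b
    # Conflict: belief the two sources place on different classes.
    conflict = sum(b_a[i] * b_b[j]
                   for i in range(len(b_a))
                   for j in range(len(b_b)) if i != j)
    scale = 1.0 / (1.0 - conflict)
    beliefs = [scale * (x * y + x * u_b + y * u_a) for x, y in zip(b_a, b_b)]
    uncertainty = scale * u_a * u_b
    return beliefs, uncertainty


# Hypothetical evidence for three DR grades from a CNN and a ViT branch.
cnn_opinion = opinion_from_evidence([9.0, 1.0, 0.0])
vit_opinion = opinion_from_evidence([4.0, 0.0, 0.0])
fused_beliefs, fused_uncertainty = combine_opinions(cnn_opinion, vit_opinion)
```

In this formulation the fused belief masses and the uncertainty mass still sum to one, and when both branches favor the same grade the fused uncertainty drops below either branch's own, which is the behavior that lets uncertainty steer how much each backbone contributes.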
